Imitation Learning with Generative Methods

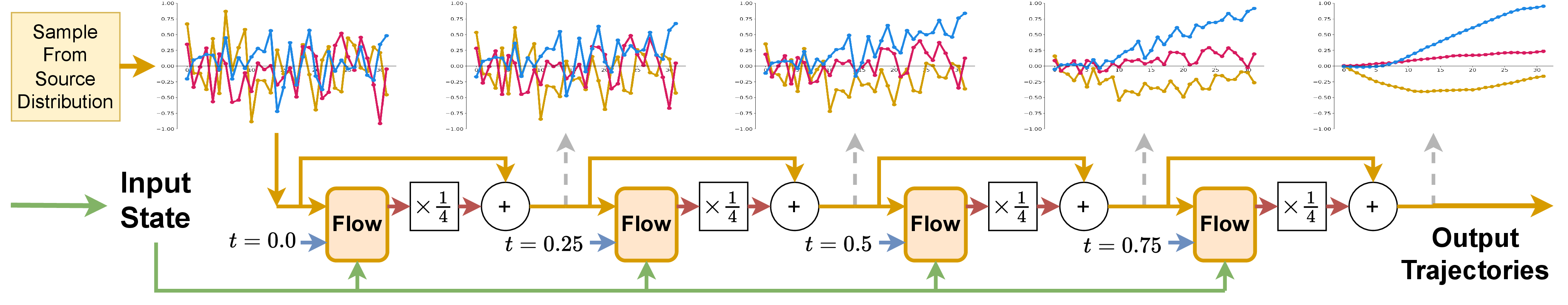

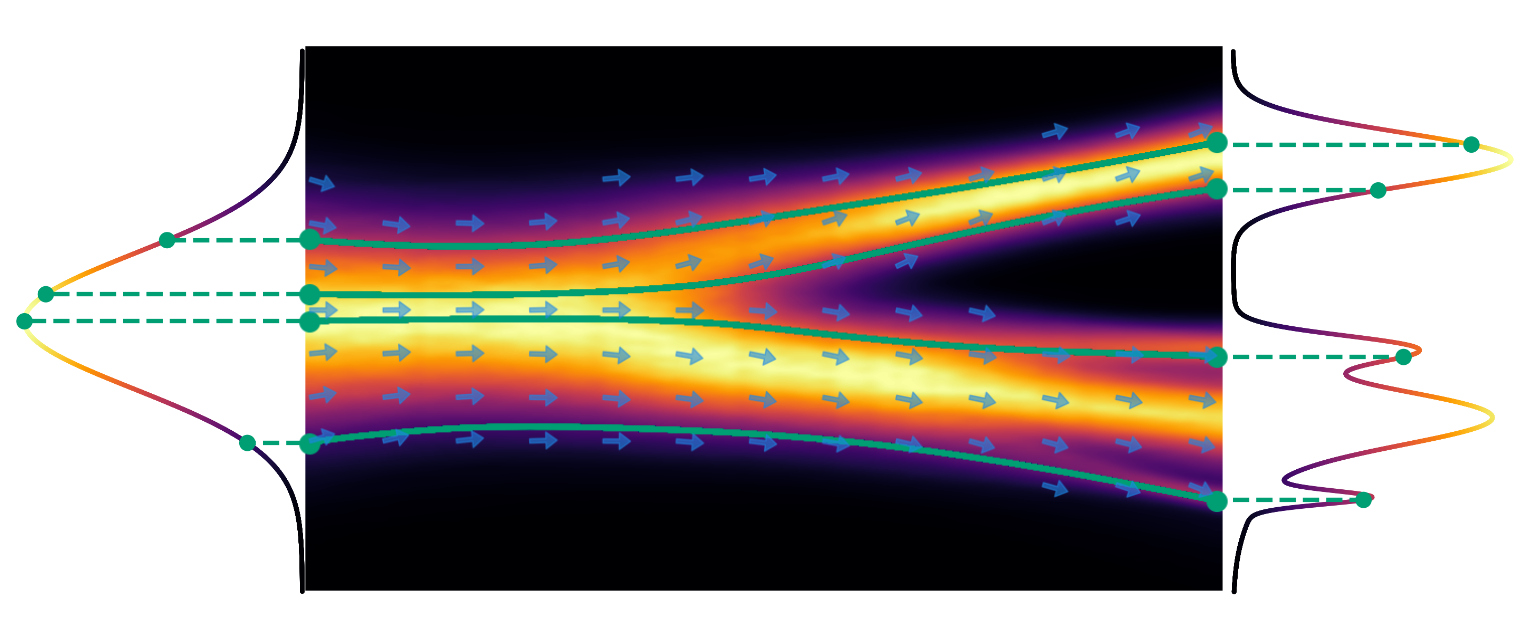

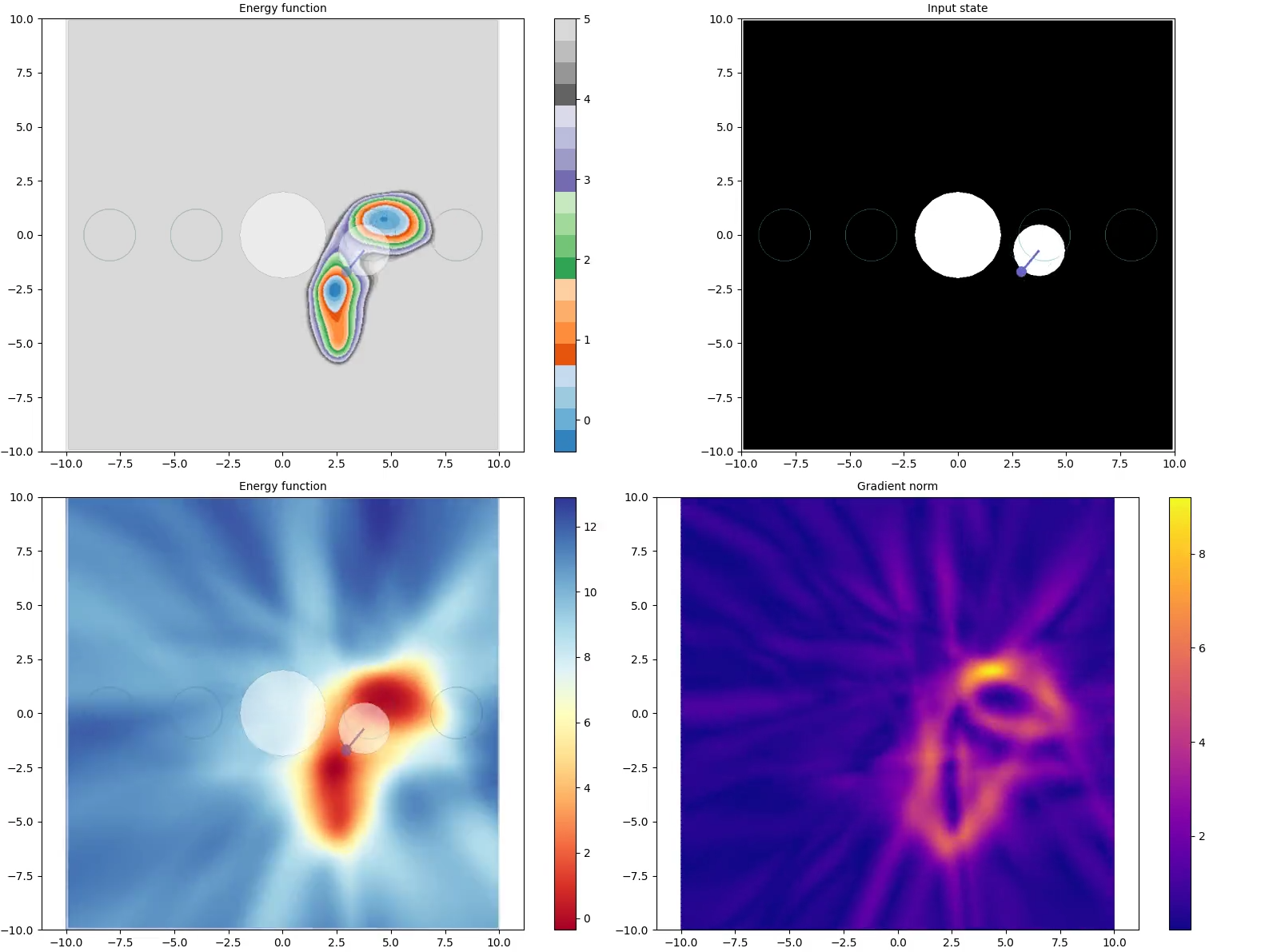

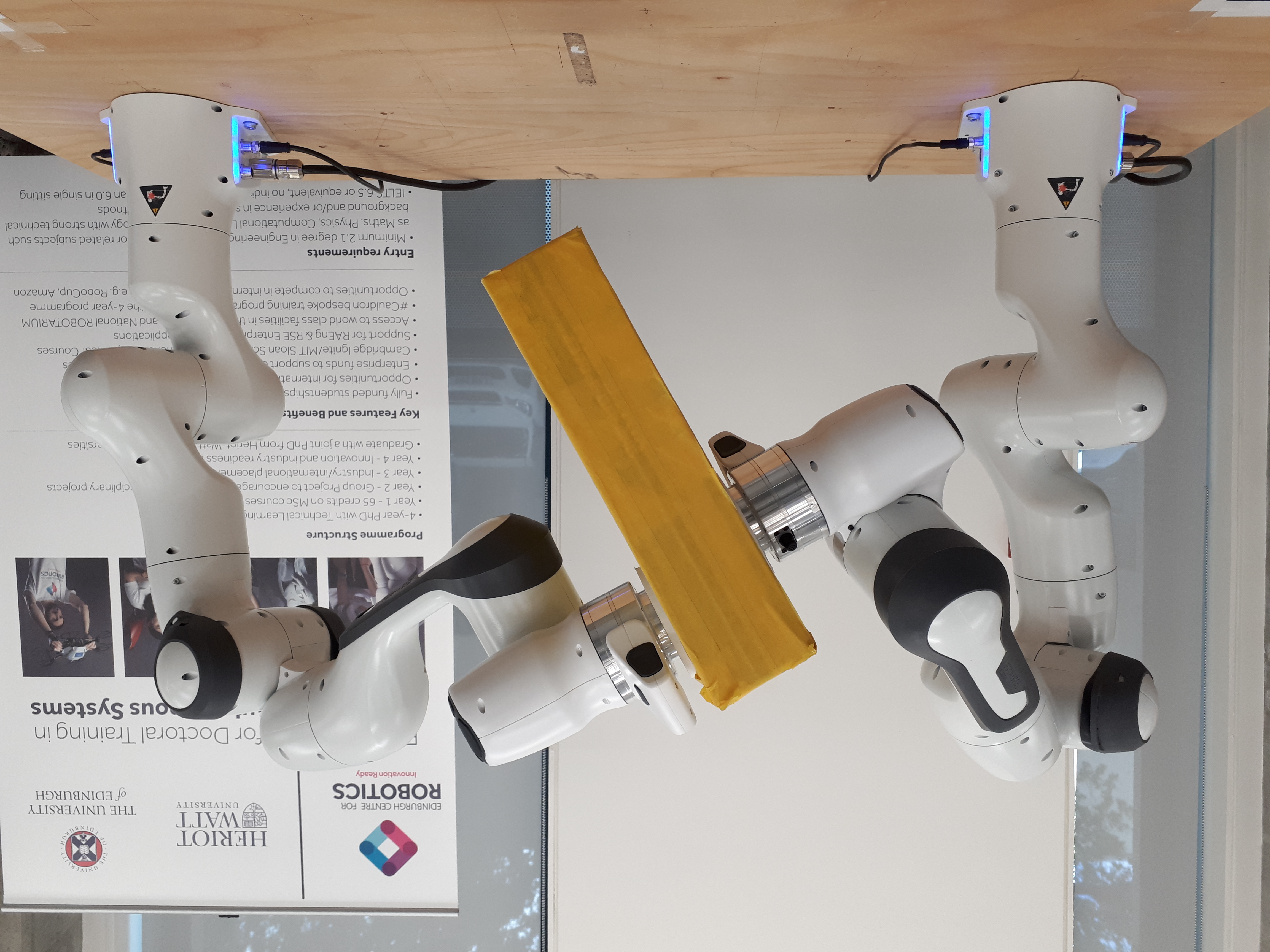

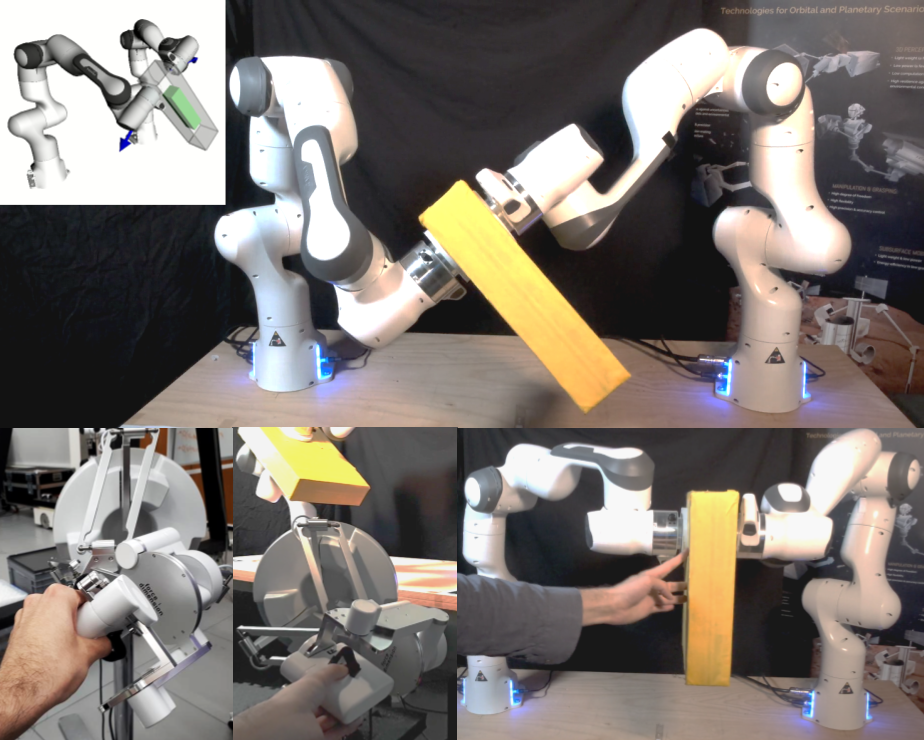

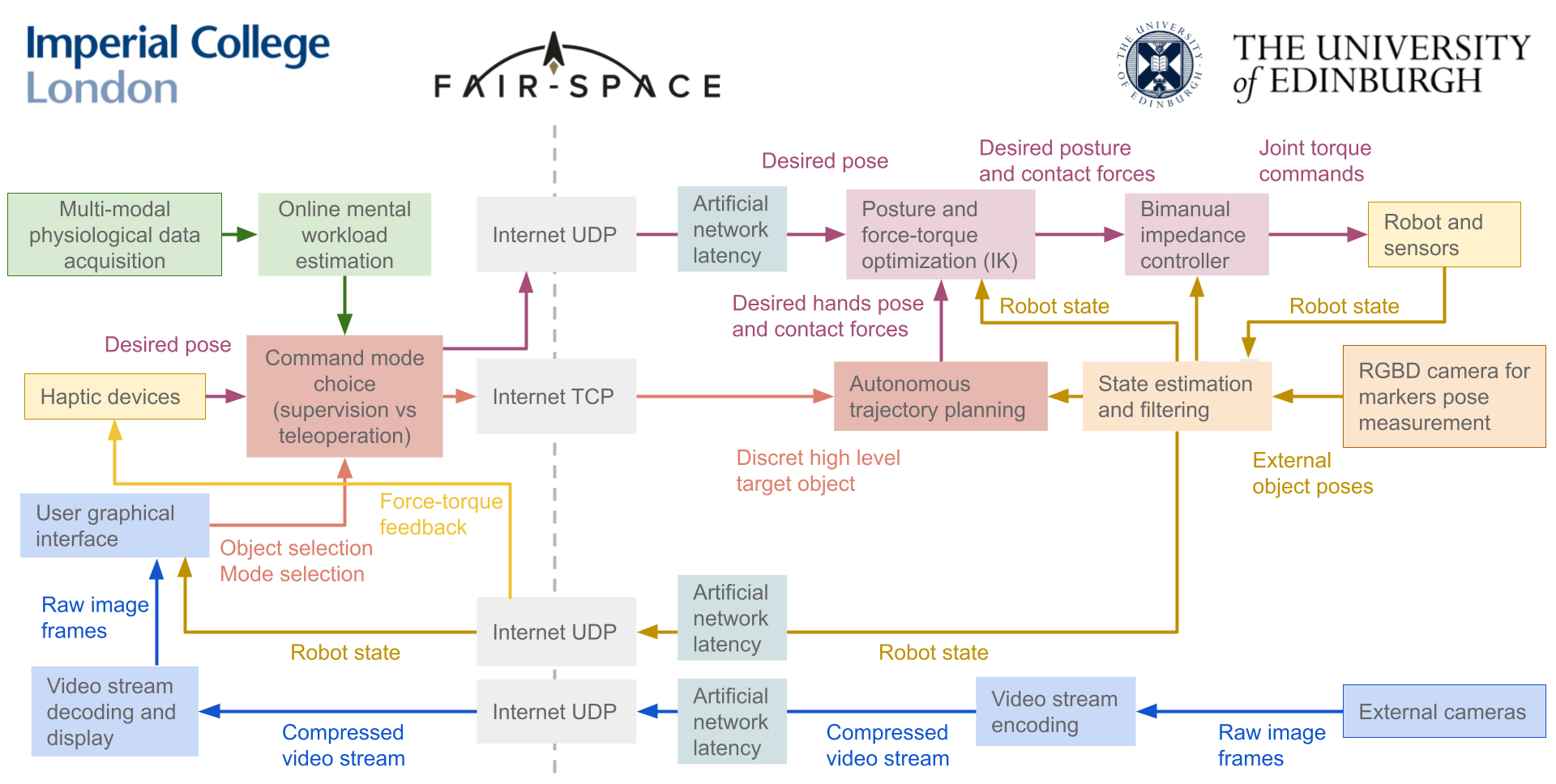

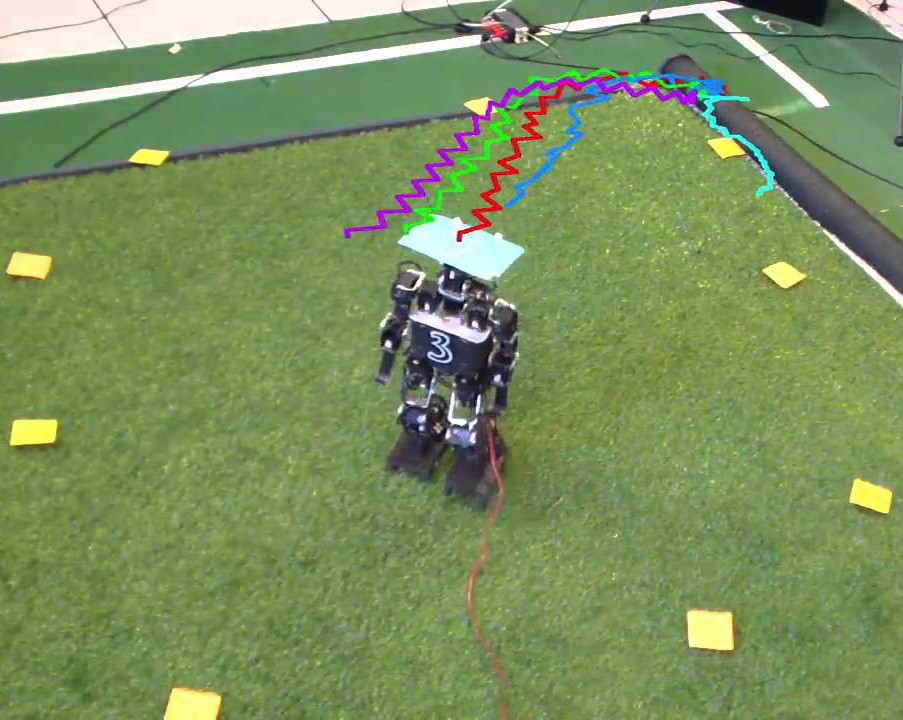

Imitation learning (behavior cloning) uses human-teleoperated demonstrations to train policies that solve tasks autonomously, without hand-designing a reward function. Recent advances in imitation learning rely on generative methods such as Diffusion and Flow Matching, which excel at modeling complex, multi-modal probability distributions. Originally developed for image generation, these methods can model high-dimensional distributions, so a policy can output not just a single action but an entire trajectory of future commands. Predicting whole trajectories improves temporal coherence and gives the reactive policy a better trade-off between feedback and feedforward control.
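To make the idea of generating a whole command trajectory concrete, here is a minimal NumPy sketch of Flow Matching with a straight-line interpolation path between a noise sample and a demonstrated trajectory. It is an illustration under stated assumptions, not the pipeline used on the robot: the function names, the horizon of 16 steps, and the 7-dimensional command are hypothetical, and the analytic `ideal_velocity` stands in for what would in practice be a neural network conditioned on observations.

```python
import numpy as np


def flow_matching_target(noise, demo, t):
    """Point on the straight path from noise (t=0) to demo (t=1), and its velocity."""
    x_t = (1.0 - t) * noise + t * demo
    target_velocity = demo - noise  # constant along a straight-line path
    return x_t, target_velocity


def flow_matching_loss(velocity_fn, noise, demo, t):
    """Mean squared error between the predicted and the target velocity."""
    x_t, target = flow_matching_target(noise, demo, t)
    return float(np.mean((velocity_fn(x_t, t) - target) ** 2))


def sample_trajectory(velocity_fn, noise, num_steps=10):
    """Generate a trajectory by Euler-integrating the velocity field from t=0 to t=1."""
    x = noise.copy()
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt  # the field is never evaluated at t=1
        x = x + dt * velocity_fn(x, t)
    return x


# Toy setup (hypothetical sizes): one demonstration of 16 future commands, 7 dims each.
HORIZON, COMMAND_DIM = 16, 7
rng = np.random.default_rng(0)
demo = np.linspace(0.0, 1.0, HORIZON)[:, None] * np.ones((1, COMMAND_DIM))


def ideal_velocity(x, t):
    """Exact minimizer of the loss when the dataset holds this single demonstration."""
    return (demo - x) / (1.0 - t)


noise = rng.standard_normal((HORIZON, COMMAND_DIM))
generated = sample_trajectory(ideal_velocity, noise)
print("max deviation from demo:", np.abs(generated - demo).max())
```

With a single demonstration the flow transports every noise sample onto that one trajectory. With many demonstrations, a learned velocity field would instead carry different noise samples to different modes of the demonstrated behavior, which is what makes these methods suited to multi-modal data.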

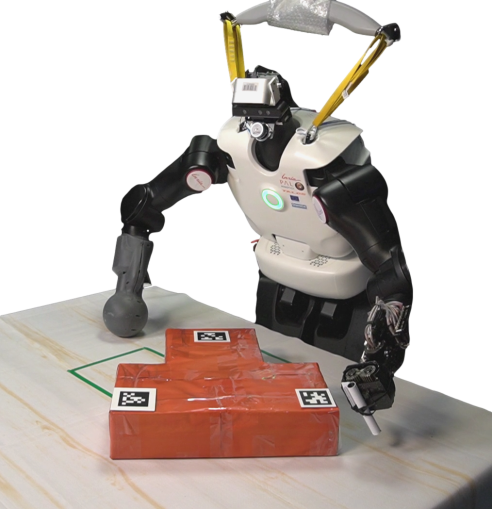

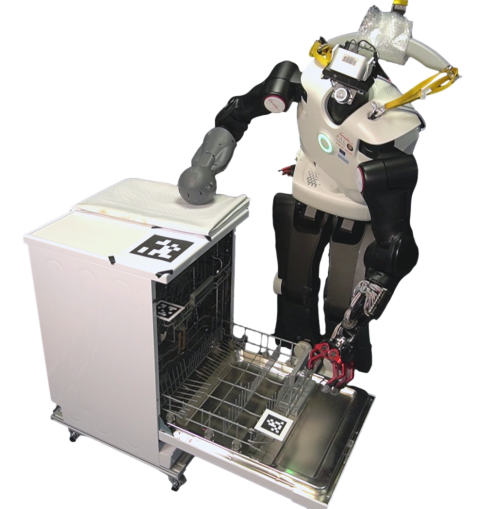

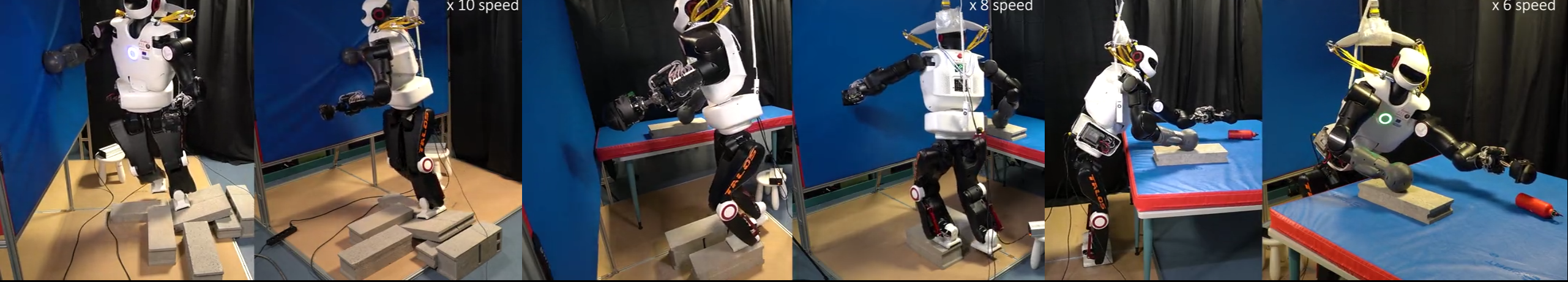

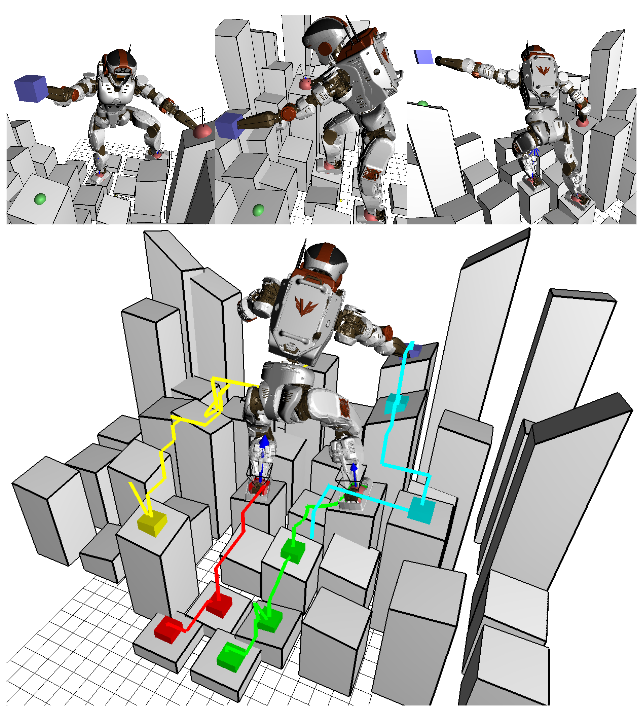

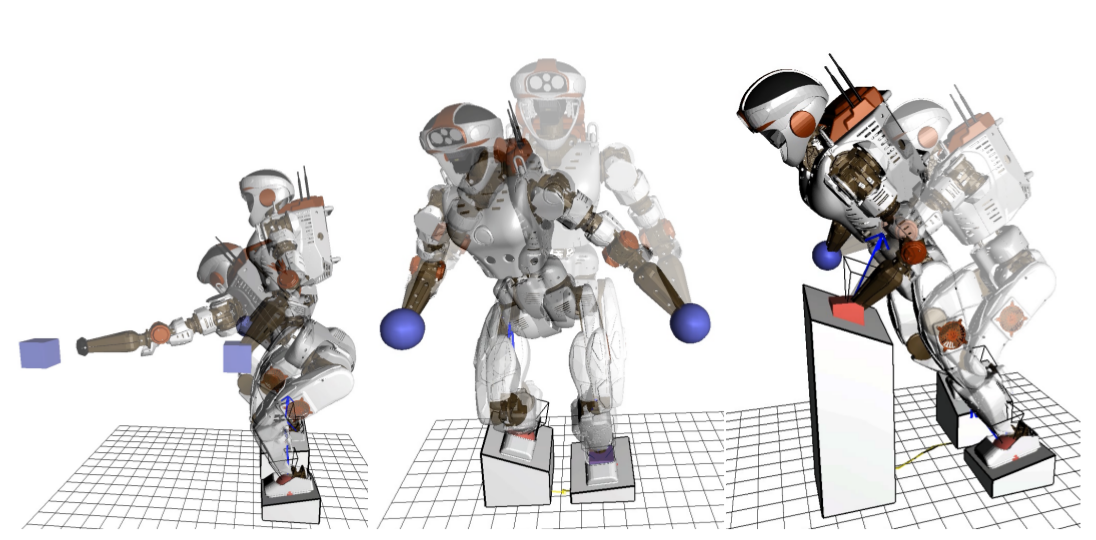

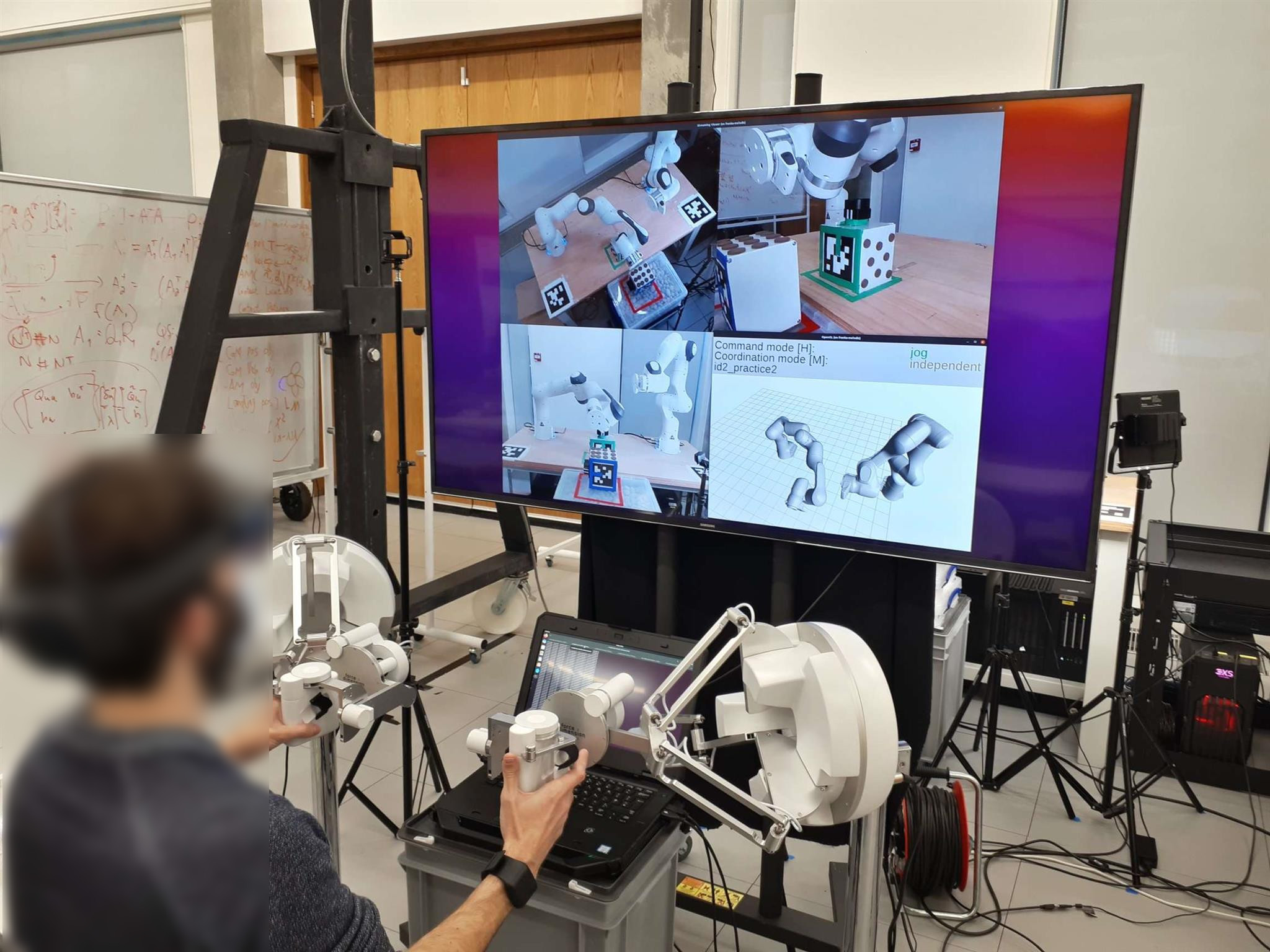

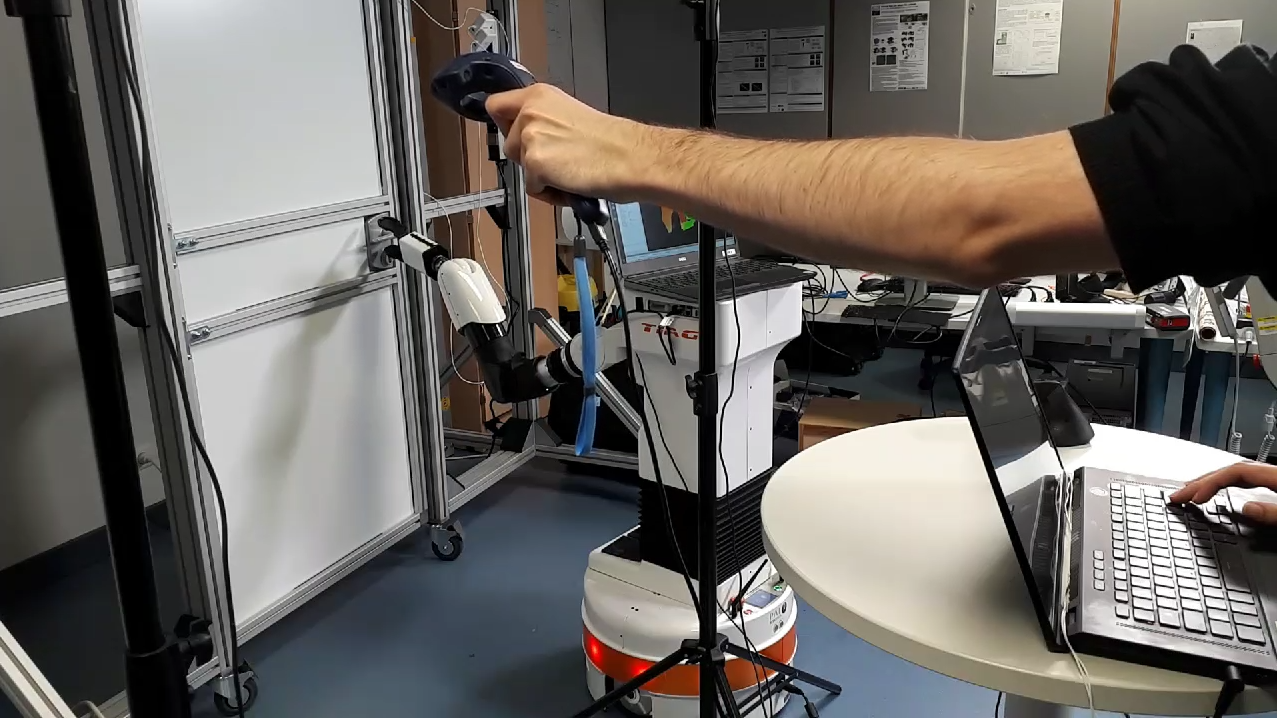

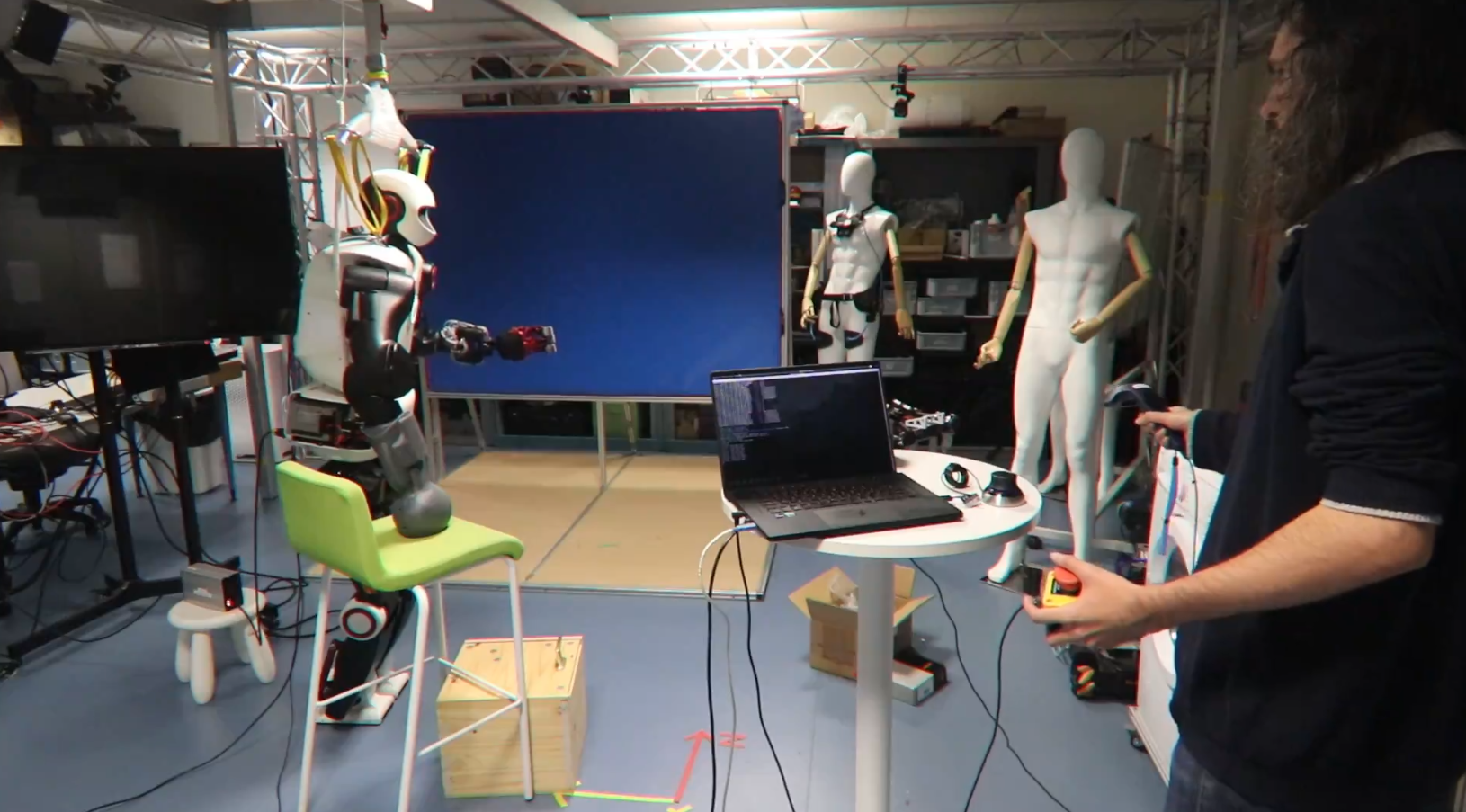

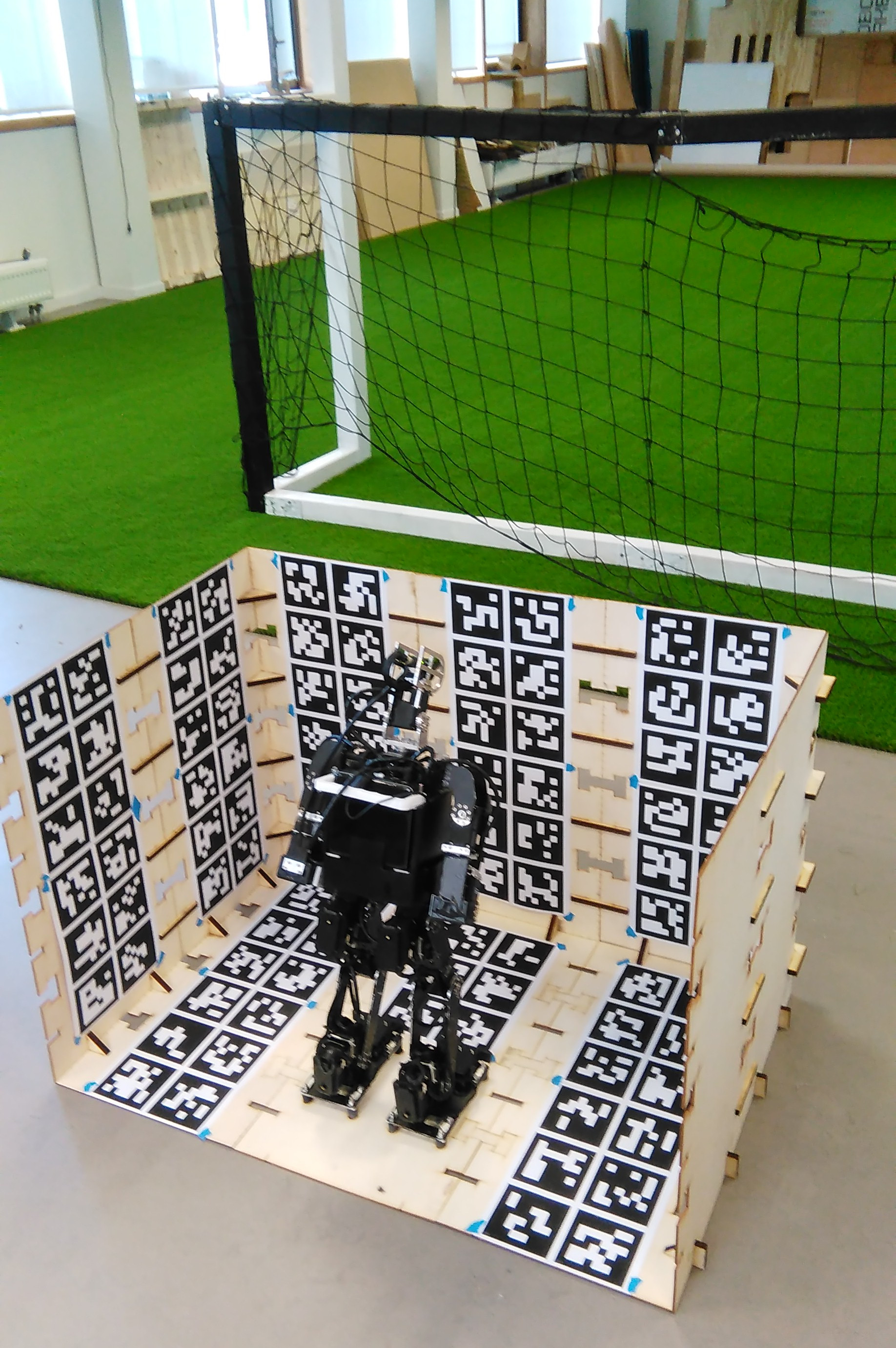

We propose an imitation learning pipeline based on Flow Matching for the Talos humanoid robot, designed to perform multi-support tasks. The approach extends the robot's manipulation capabilities by learning from human demonstrations the "common sense" of when and where to establish additional contacts. We also demonstrate that a shared-autonomy assisted teleoperation method, which uses the policy learned from demonstrations, effectively assists in tasks outside the training distribution.